The $200B Insight Hidden in Plain Sight

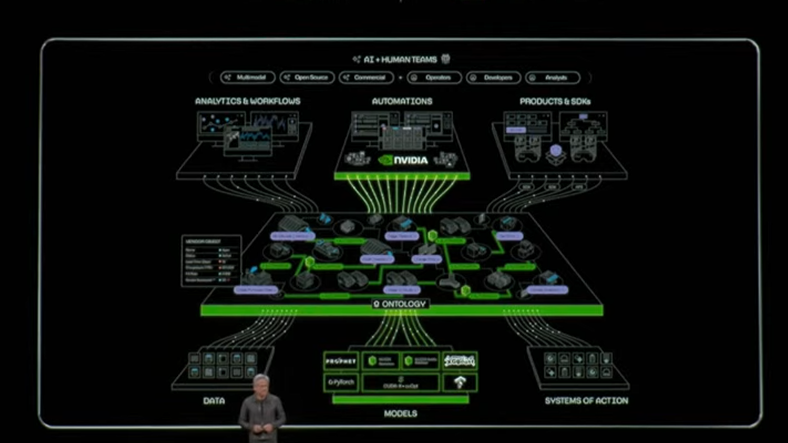

Nvidia and Palantir just announced a new partnership to build an integrated AI stack, with Palantir's Ontology at its core. In its coverage of the news, the tech press has treated ontologies like some breakthrough innovation.

It is not. The semantic web pioneers saw this development coming twenty years ago.

The Problem Nobody Talks About

Every enterprise must deal with ambiguity. Your "customer" is my "client" is accounting's "revenue unit." Humans handle this fine; we are context-switching machines. Machines are not. They need explicit relationships and unambiguous semantics. When Palantir promises "context-aware reasoning" through their Ontology framework, they are admitting what we have known all along: throwing compute at messy data will not solve the fundamental problem of meaning.
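To make "explicit relationships" concrete, here is a minimal sketch in Python. The mapping and the `canonical_concept` helper are hypothetical names invented for illustration. The idea is that each department's label resolves to one shared concept, and an unknown label fails loudly instead of being guessed at.

```python
# Hypothetical mapping from each department's label to one canonical concept.
SYNONYMS = {
    "customer": "Customer",
    "client": "Customer",
    "revenue unit": "Customer",
}


def canonical_concept(label: str) -> str:
    """Return the shared concept for a label, or raise if none is defined."""
    key = label.strip().lower()
    if key not in SYNONYMS:
        # Refuse to guess: an undefined term is a modeling gap, not a typo to paper over.
        raise KeyError(f"no canonical concept defined for {label!r}")
    return SYNONYMS[key]


# All three departmental labels resolve to the same concept.
labels = ["customer", "Client", "revenue unit"]
resolved = {canonical_concept(label) for label in labels}
```

A real ontology carries far more than synonyms, but even this small table removes a class of silent disagreement.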

I learned this lesson while building a pricing ontology for Deepgram's transcription services. Pricing should be a simple matter, right? Base rates, volume tiers, feature add-ons, time calculations, and dozens of edge cases some salesperson promised three years ago. Try explaining that to a database. Now try explaining it to an AI that needs to optimize costs.

With an ontology, you only need to define these relationships once. A TranscriptionRequest has cost components. Components have units and measures. The relationships are explicit, inheritance is clear, and your AI can reason about pricing like your CFO does. The pricing ontology easily performs double duty as a pricing engine. With my expertise and experience in developing pricing engines, I can show clients how Teaspoon's transcription services will save them money when switching from Deepgram.
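A rough sketch of how such a model can double as a pricing engine is below, in plain Python rather than OWL. Only `TranscriptionRequest` and the notion of cost components come from the text above. The other class names, the fields, and every rate and threshold are assumptions made up for illustration, not actual prices from any vendor.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass(frozen=True)
class CostComponent:
    """A priced part of a request: a rate per unit of some measure."""
    name: str
    unit: str        # the measure this component is billed in, e.g. "minute"
    rate: Decimal    # price per unit (illustrative numbers only)

    def cost(self, quantity: Decimal) -> Decimal:
        return self.rate * quantity


@dataclass(frozen=True)
class DiscountedComponent(CostComponent):
    """Inherits unit and rate; adds a volume discount above a threshold."""
    threshold: Decimal = Decimal("0")
    discount: Decimal = Decimal("0")   # fraction, e.g. 0.10 for 10 percent

    def cost(self, quantity: Decimal) -> Decimal:
        full = super().cost(quantity)
        if quantity > self.threshold:
            return full * (Decimal("1") - self.discount)
        return full


@dataclass
class TranscriptionRequest:
    """A request has measured quantities and the components that price them."""
    quantities: dict[str, Decimal]
    components: list[CostComponent] = field(default_factory=list)

    def total(self) -> Decimal:
        # Each component is priced against the quantity measured in its own unit.
        return sum(
            (c.cost(self.quantities[c.unit]) for c in self.components),
            Decimal("0"),
        )


base = DiscountedComponent(
    "base transcription", "minute", Decimal("0.01"),
    threshold=Decimal("1000"), discount=Decimal("0.10"),
)
addon = CostComponent("feature add-on", "minute", Decimal("0.002"))
request = TranscriptionRequest(
    quantities={"minute": Decimal("2000")},
    components=[base, addon],
)
total = request.total()
```

Under these made-up rates, the base component costs 18 after the discount and the add-on costs 4, for a total of 22. The point is that the discount rule lives in one place and is inherited, not copied into each caller.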

Why It Took This Long

The semantic web crowd built the entire stack in the early 2000s: RDF, OWL, SPARQL. But the world was not ready for it. We were still fighting over XML versus JSON. Cloud computing was nascent. Most businesses did not have enough digital operations to require semantic precision.

Now Lowe's uses the Nvidia-Palantir stack to create a digital twin of its global supply chain: thousands of suppliers, millions of SKUs, billions of relationships. Good luck managing that with PostgreSQL and Python scripts!

William Kent called it in 1978. In his book, "Data and Reality," he explained why the world does not fit in tidy tables. Entities have fuzzy boundaries. Relationships are messy. Categories overlap and mutate. SQL forces us to pretend otherwise. We build pristine schemas, then spend decades patching them as reality laughs at our models.

The Setup That Ships

Here is what works: three systems in sync.

First, a domain expert who knows the business. Second, an AI agent that parses documents and proposes relationships at superhuman speed. Third, a reasoning engine like Hoolet that runs formal proofs and catches logical contradictions before they hit production.

When I built that pricing ontology, we had an AI talking directly to Protégé, the open-source ontology editor powering knowledge systems since the 90s. The AI proposes "volume discounts apply to base transcription rates." The reasoner checks if this breaks anything. The human confirms or tweaks.

The reasoner delivers mathematical certainty about logical consistency, unlike an LLM's probabilistic guesses. Each cycle gets closer to ground truth. The prover catches contradictions that would cascade into disasters. The AI learns from corrections. The human user ensures we are modeling reality, not fantasy.
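The propose, check, confirm cycle can be sketched in a few lines of Python. This is a toy under stated assumptions, not the setup described above: the "reasoner" here only flags a class that ends up under two classes declared disjoint (what a real OWL reasoner would report as an unsatisfiable class), and all class names and function names are hypothetical.

```python
from typing import Callable

Axiom = tuple[str, str]  # (subclass, superclass)


def ancestors(cls: str, subclass_of: set[Axiom]) -> set[str]:
    """All superclasses reachable from cls, including cls itself."""
    seen = {cls}
    stack = [cls]
    while stack:
        current = stack.pop()
        for sub, sup in subclass_of:
            if sub == current and sup not in seen:
                seen.add(sup)
                stack.append(sup)
    return seen


def is_coherent(subclass_of: set[Axiom], disjoint: set[Axiom]) -> bool:
    """False if any class sits under two classes declared disjoint."""
    classes = {c for axiom in subclass_of for c in axiom}
    for cls in classes:
        ups = ancestors(cls, subclass_of)
        if any(a in ups and b in ups for a, b in disjoint):
            return False
    return True


def review_cycle(
    accepted: set[Axiom],
    disjoint: set[Axiom],
    proposals: list[Axiom],
    human_approves: Callable[[Axiom], bool],
) -> set[Axiom]:
    """Keep a proposal only if the checker and then the human both accept it."""
    for proposal in proposals:
        candidate = accepted | {proposal}
        if not is_coherent(candidate, disjoint):
            continue  # the checker rejects before a human ever sees it
        if human_approves(proposal):
            accepted = candidate
    return accepted


accepted = {("VolumeDiscount", "Discount"), ("BaseRate", "Charge")}
disjoint = {("Discount", "Charge")}
proposals = [
    ("VolumeDiscount", "Charge"),   # contradiction: a discount cannot also be a charge
    ("FeatureAddOn", "Charge"),     # fine
]
result = review_cycle(accepted, disjoint, proposals, lambda axiom: True)
```

Here the first proposal is dropped by the checker and the second is kept. The ordering is the design choice: the cheap, deterministic check runs first so the human only reviews proposals that are already logically admissible.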

What Happens at Scale Without Semantics?

Most enterprise AI initiatives, unfortunately, are headed for a cliff.

You can hit 80 percent accuracy with raw compute and clever prompting, even 95 percent on narrow tasks. But scale that across an organization with hundreds of agents making thousands of decisions about procurement, operations, compliance, and customer service, and complexity will abound, entropy will explode, and the organization will implode.

One agent thinks "high-priority customer" means one thing. Another disagrees. Marketing's "qualified lead" differs from sales'. Finance's cost allocation does not match operations'. Nobody notices until the errors compound into million-dollar mistakes or regulatory violations.

This is not hypothetical. This is where many companies are headed.

A well-constructed ontology crystallizes truth. Not the flashy kind, but the pure carbon lattice kind: immutable, clear, mathematically precise. Your business logic stops being scattered across a thousand microservices and Excel sheets. It lives in one formal structure that every AI agent can query and trust.

The Market Will Force This

Soon, AI systems without ontological foundations will be unmarketable, like apps without security audits. Not because of trends, but because the first wave of production AI will produce enough expensive failures that procurement teams will start asking:

"How do your AI agents ensure semantic consistency?"

"Can you prove your reasoning is logically sound?"

"What happens when your AI makes contradictory recommendations?"

Companies that cannot answer these questions unequivocally will not win enterprise contracts. Other companies, the ones building on ontologies, will eat their lunch.

The Window of Opportunity Is Open

The semantic web pioneers built the tools. Nvidia brought the compute. Palantir proved the enterprise value. The infrastructure exists. The business need is screaming to be filled. And most of your competitors still think "ontology" is philosophy.

Companies moving forward with ontologies now will lock in an advantage that will be nearly impossible to replicate. Good ontologies require deep domain knowledge, organizational alignment, and months of iteration. You cannot hire your way to this. You cannot copy-paste it. You must build it.

The semantic revolution is not coming. It is here, running on Nvidia GPUs, powered by decades-old standards that suddenly matter more than anything else in AI.

There is only one question: Will you be explaining to your board why you moved early, or will you have to explain why you did not?
